[This note was first published internally; I copied it here as a backup.]

Although I have deployed a couple of apps to our k8s cluster, I still find it tricky to go through the whole journey fluently. I took some notes and put them down here as a memo. I hope it helps developers like me who still run into k8s issues from time to time.

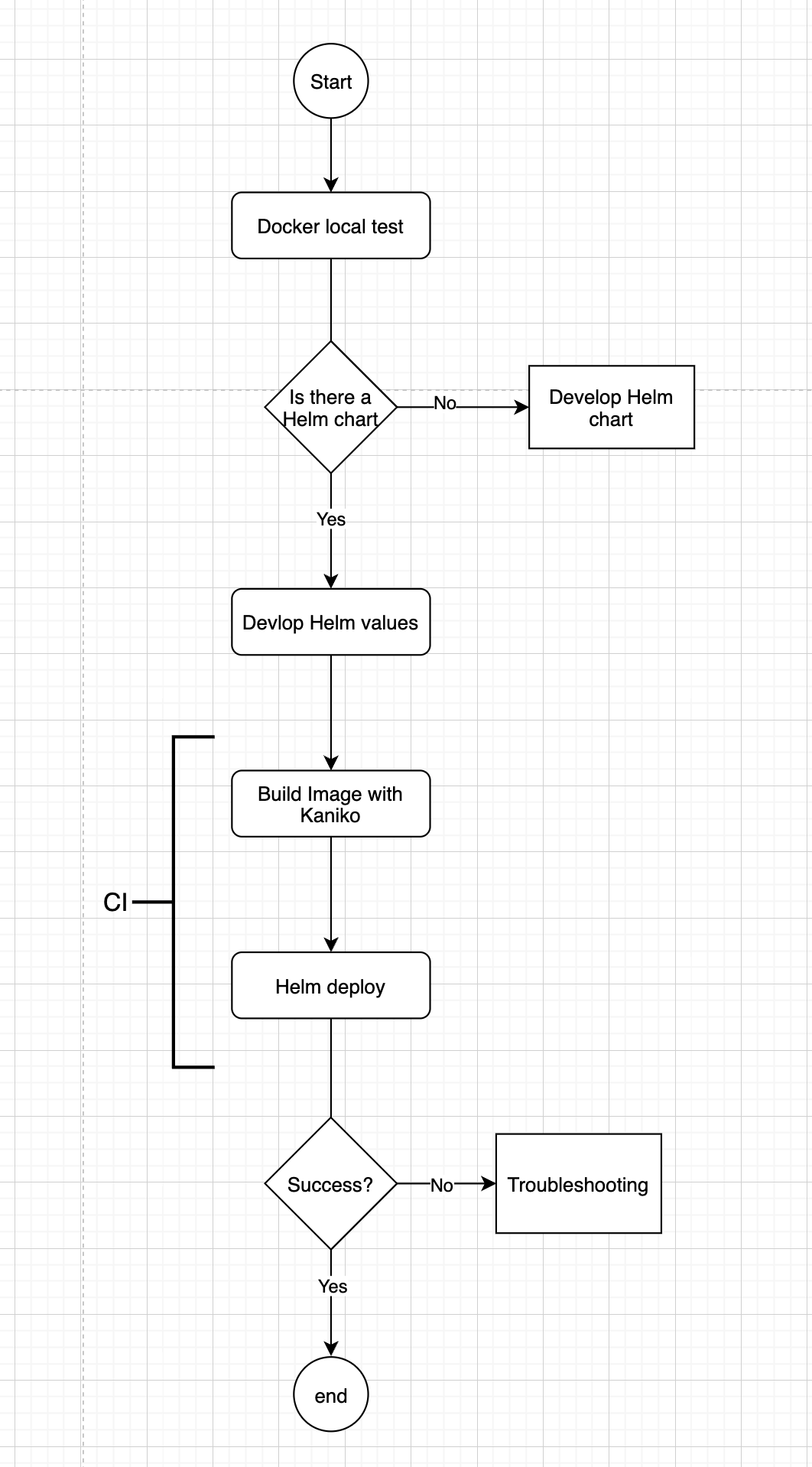

The deployment steps

Local Test

So you have a Dockerfile in your project and a Docker engine running on your machine.

1. Build the image

docker build . -t myapp -f Dockerfile

Suppose your app listens on port 80 inside the container.

2. Run the image in a container; remember that you need to map the container port to a host port

docker run -p 8080:80 myapp

Then you can visit localhost:8080 to check your homepage, or use ‘curl’ to check that the app is running.

Build image

Kaniko

We use Kaniko to build images in our CI pipeline; see https://github.com/GoogleContainerTools/kaniko

Harbor

Harbor is the registry where we store the images; find more details at https://goharbor.io/

Helm

Helm chart

Helm is to k8s what apt-get is to Linux or brew is to macOS. A Helm chart is a group of templates that helps you define, install and upgrade your app in k8s. You can also develop a new chart; see the guide "How to create Helm charts".

Helm_values

Once you have decided which Helm chart to use, you need to feed helm_values to the chart from your application. Chances are you will run into some tricky problems in this step. In my experience, they mostly come from not being familiar with the Helm template syntax. Besides checking the Helm docs ("Helm | Getting Started") over and over again, you can also refer to the Go documentation ("template package - text/template - Go Packages"), since Helm uses Go templates and the official Go site explains the syntax better.
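As a rough illustration of how helm_values flow into a chart, here is a minimal sketch. The value keys (image, service, env) and the Harbor host name are hypothetical and only for illustration; your chart defines its own keys, so check its values.yaml and templates.

```yaml
# values.yaml (hypothetical keys and values, for illustration only)
image:
  repository: harbor.example.com/myteam/myapp
  tag: "1.0.0"
service:
  port: 80
env:
  LOG_LEVEL: info
```

```yaml
# templates/deployment.yaml (excerpt of a hypothetical chart)
containers:
  - name: {{ .Chart.Name }}
    # "default" falls back to the chart's appVersion when image.tag is empty
    image: "{{ .Values.image.repository }}:{{ .Values.image.tag | default .Chart.AppVersion }}"
    ports:
      - containerPort: {{ .Values.service.port }}
    {{- with .Values.env }}
    env:
      {{- range $name, $value := . }}
      - name: {{ $name }}
        value: {{ $value | quote }}
      {{- end }}
    {{- end }}
```

Two things tend to trip people up here: the leading dash in `{{-` trims the whitespace (including the newline) before the action, and `quote` makes sure every env value is rendered as a string, which is what Kubernetes expects for env values.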

Helm deploy

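Helm deploy applies your helm_values to the Helm chart and does the real deployment. Before you submit to CI, you can run it locally with the --dry-run option and check whether the rendered output is as expected. Note that Helm 3 expects the release name first and the chart second; add -f with the path to your values file to render with your own helm_values.

helm install --dry-run --debug $RELEASE_NAME $CHART_NAME -n $NAMESPACE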

Troubleshooting

Usually, there are several possible directions to explore:

- Does the image run correctly on its own locally?

- Have you set the right environment variables in production?

- Have you configured the right port? This is a very important one: the targetPort in service.yml should equal the containerPort in deployment.yml.

- Have you set the livenessProbe/readinessProbe paths, and are you sure they return 200?

- … feel free to add more
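To make the port and probe checks above concrete, here is a minimal sketch of the two manifests side by side. The app name, image reference and probe paths are placeholders I made up for illustration; use whatever your app actually exposes.

```yaml
# deployment.yml (excerpt): the container listens on port 80
spec:
  template:
    metadata:
      labels:
        app: myapp                   # placeholder label
    spec:
      containers:
        - name: myapp
          image: myapp:latest        # placeholder image reference
          ports:
            - containerPort: 80
          livenessProbe:
            httpGet:
              path: /healthz         # hypothetical path; should return 200
              port: 80
          readinessProbe:
            httpGet:
              path: /ready           # hypothetical path; should return 200
              port: 80
---
# service.yml (excerpt)
spec:
  selector:
    app: myapp                       # must match the pod labels above
  ports:
    - port: 80                       # port the Service exposes inside the cluster
      targetPort: 80                 # port on the pod; must equal containerPort
```

If the Service selector does not match the pod labels, or targetPort points at a port the container is not listening on, the Service ends up with no working endpoints even though the pod itself looks healthy.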

Check the excellent troubleshooting guide: "A visual guide on troubleshooting Kubernetes deployments"